Bayesian Learning about a Hitting Probability

I’ll be on the road soon, so I’ll be taking a break from the blog for about a month. For those of you learning data science and have some free time, there is a nice resource called DataCamp which offers short courses on a wide range of data science and modeling topics. I’ve just completed my own DataCamp course “Beginning Bayes” that will be available in the next month. I tried to put together a course where the only prerequisites are (1) some knowledge of R and (2) a little background in probability and regression. (I don’t do any calculus and there are few formulas.) The idea is to present Bayesian thinking to data scientists who don’t have strong math backgrounds.

I thought I’d introduce some of the ideas in my course and a new package TeachBayes that provides a number of helper functions for communicating Bayesian thinking. Of course, I’ll use baseball as my context.

Installing the TeachBayes Package

Although the package is on CRAN, I’d recommend installing the developmental version of the package from my github site:

library(devtools)

install_github("bayesball/TeachBayes")

Learning about a Hitting Probability

Here is an illustration of using discrete priors to learn about a player’s hitting probability. Suppose you are interested in estimating p, the probability of a hit, for a specific player. You think that values of .2, .21, .22, …, .34 are all plausible values of his hitting probability and you assign a uniform prior to these values where each value gets the same probability (here there are 15 values and so I assign a probability of 1/15 to each value). This prior indicates that I am pretty clueless about possible true batting averages.

I set up a data frame with the variable P the values of p, and the variable Prior has the prior probabilities.

bayes_df <- data.frame(P = seq(.2, .34, by=.01),

Prior = rep(1/15, 15))

Next we observe some data. My player gets to hit 100 times, getting 30 hits. This is binomial data with sample size 100 and probability of success P. The likelihood is the binomial probability of this outcome, viewed as a function of the hitting probability. I add the likelihoods to the table using the function dbinom .

bayes_df$Likelihood <- dbinom(30, size=100, prob=bayes_df$P)

What have we learned about the player’s hitting probability? We use Bayes’ rule — there is a special function bayesian_crank() for computing the posterior probabilities for P. (Bayesians use the “bayesian crank” phrase to express the straightforward way of revising opinion using Bayes’ rule.)

library(TeachBayes)

(bayes_df <- bayesian_crank(bayes_df))

P Prior Likelihood Product Posterior

1 0.20 0.06666667 0.005189643 0.0003459762 0.006441961

2 0.21 0.06666667 0.009298519 0.0006199013 0.011542353

3 0.22 0.06666667 0.015390090 0.0010260060 0.019103886

4 0.23 0.06666667 0.023665974 0.0015777316 0.029376831

5 0.24 0.06666667 0.033980304 0.0022653536 0.042180121

6 0.25 0.06666667 0.045753808 0.0030502538 0.056794699

7 0.26 0.06666667 0.057990835 0.0038660557 0.071984655

8 0.27 0.06666667 0.069414736 0.0046276491 0.086165268

9 0.28 0.06666667 0.078696286 0.0052464190 0.097686556

10 0.29 0.06666667 0.084715503 0.0056477002 0.105158276

11 0.30 0.06666667 0.086783865 0.0057855910 0.107725756

12 0.31 0.06666667 0.084766785 0.0056511190 0.105221933

13 0.32 0.06666667 0.079079112 0.0052719408 0.098161762

14 0.33 0.06666667 0.070565582 0.0047043722 0.087593825

15 0.34 0.06666667 0.060308919 0.0040205946 0.074862118

The prior_post_plot() function graphically compares the prior and posterior distributions for P.

prior_post_plot(bayes_df)

Before observing any hitting data, we were clueless about the guy’s hitting probability, but now it is more likely to be in a range of values about P = .300.

In fact, we can use the discint() function in the TeachBayes package to find a probability interval for P — the first input is a data frame (or matrix) with two variables (the first contains the values and the second the probabilities) and the second input is the probability content.

discint(select(bayes_df, P, Posterior), 0.6) $prob [1] 0.6015481 $set [1] 0.28 0.29 0.30 0.31 0.32 0.33

The probability the hitting probability falls between 0.28 and 0.33 is about 60 percent.

Using an Informative Prior

But of course our choice of prior was a bit lame. I know baseball and so I likely know something about the player’s hitting probability. We illustrate using an informative normal prior, and an approximate normal/normal Bayesian analysis here.

Suppose I’m interested in estimating Joey Votto’s 2016 batting probability before the beginning of the 2016 season. Looking at his season stats on Baseball-Reference, I see that his season AVG’s are generally in the .300 through .320 range. After some thought, I make the following statements:

- His 2016 hitting probability P is equally likely to be smaller or larger than .310 (that is the median is .310).

- It is unlikely (with probability 0.10) that his hitting probability is smaller than 0.280 — in other words my prior 10th percentile of P is .280.

The function normal.select() with find the mean and standard deviation of the normal prior that matches this information:

(normal_prior <- normal.select(list(p=.5, x=.320),

list(p=.1, x=.280)))

$mu

[1] 0.32

$sigma

[1] 0.03121217

I draw my normal(.320, .031) prior below using the special normal_draw() function.

normal_draw(c(.320, .031))

Next we observe Votto’s 2016 hitting performance — he batting .326 with 556 at-bats. Here the data estimate of his hitting probability is .326 with an associated standard error of sqrt(.326 * (1 – .326) / 556) = 0.0199.

The normal_update() function will compute the mean and standard deviation of the normal posterior given a normal prior and a normal sampling density (reasonable approximation here). The inputs are the prior (mean and standard deviation) and data (sample mean and standard error). The teach=TRUE option is helpful for seeing how the prior and data information get combined.

normal_update(normal_prior, c(.326, .0199), teach=TRUE)

Type Mean Precision Stand_Dev

1 Prior 0.3200000 1026.484 0.03121217

2 Data 0.3260000 2525.189 0.01990000

3 Posterior 0.3242659 3551.673 0.01677967

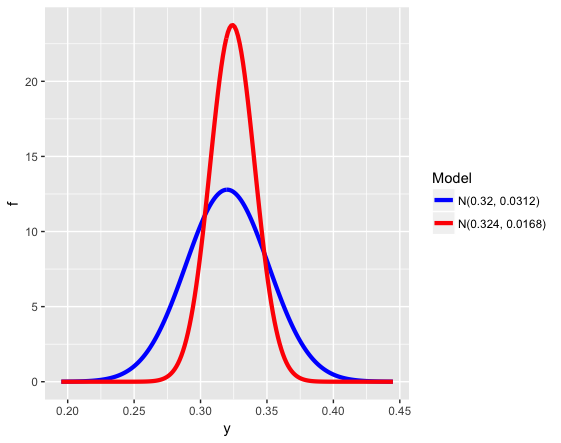

The posterior is normal with mean .324 and standard deviation 0.0168 — I use the many_normal_plots() function to compare the prior and posterior densities for P. This plot shows I am more confident (after seeing the 2016 season data) about Votto’s hitting probability.

many_normal_plots(list(c(.320, 0.0312), c(.324, 0.0168)))

Finally, I use the qnorm() function to construct a 90% probability interval for Votto’s 2016 hitting probability.

qnorm(c(0.05, 0.95), mean=.324, sd=0.0168) [1] 0.2963665 0.3516335

Try the Course?

I encourage you to try the Beginning Bayes class at DataCamp when it is available. Since this is new type of teaching experience for me, I’m very interested in any feedback. One thing that is fascinating about teaching is that your audience always changes and one needs to think and try out new methods or pedagogies to be effective in communicating statistics.

Recent Comments